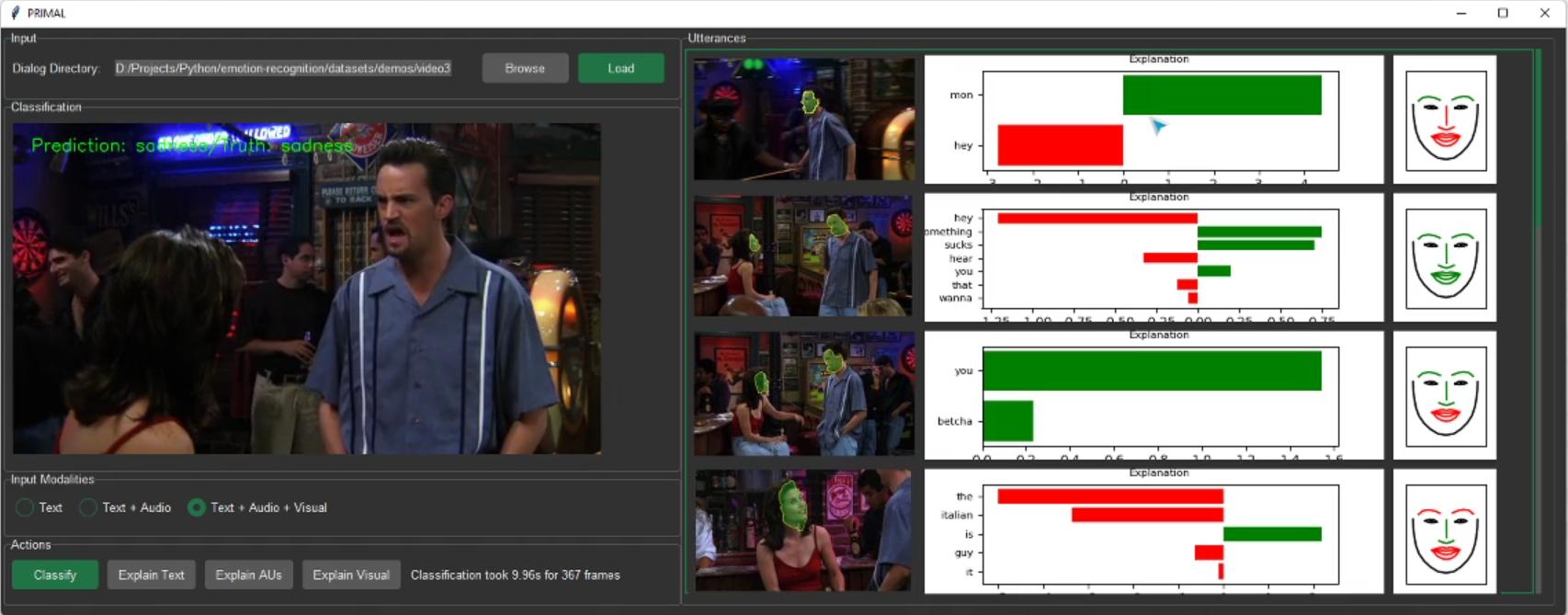

Emotion Recognition in Conversations

Project Details

This project explores novel feature extraction techniques for multi-modal emotion recognition in multi-speaker conversations. We propose a novel feature fusion technique that combines the individual modal representations into a unified utterance-level representation. We also incorporate self-supervision to improve the modal representations, along with cross-modal attention and active learning. We achieve ~5% and ~2% better performance on the MELD and IEMOCAP datasets, respectively, while using only ~70% and 80% of the data.
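The description above does not say which active learning criterion the project uses. As a minimal sketch of the general idea, assuming entropy-based uncertainty sampling (a common choice, not necessarily the one used here), the snippet below ranks unlabeled utterances by the entropy of the model's predicted emotion distribution and picks the most uncertain ones for labeling. The function names, utterance ids, and probabilities are all hypothetical.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions, budget):
    """Return the ids of the `budget` most uncertain utterances.

    predictions: dict mapping utterance id -> list of class probabilities.
    """
    ranked = sorted(
        predictions,
        key=lambda uid: entropy(predictions[uid]),
        reverse=True,  # highest entropy (most uncertain) first
    )
    return ranked[:budget]


# Hypothetical model outputs over three emotion classes.
predictions = {
    "utt_1": [0.98, 0.01, 0.01],  # confident prediction, low entropy
    "utt_2": [0.34, 0.33, 0.33],  # near-uniform, high entropy
    "utt_3": [0.70, 0.20, 0.10],
    "utt_4": [0.50, 0.49, 0.01],
}

selected = select_for_labeling(predictions, budget=2)
print(selected)
```

Labeling only the utterances the model is least sure about is one way a model can be trained on a subset of the data rather than all of it.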

Project Information

- Category: ML

- Stack: Python, PyTorch